The Network page displays round-trip latencies between all nodes in your cluster. This represents the time required to transmit a packet across a network, and is highly dependent on your network topology. Use this page to determine whether your latency is appropriate for your topology pattern, and to identify nodes with unexpected latencies or connectivity issues.

To view this page, access the DB Console and click Network in the left-hand navigation.

Sort and filter network latencies

Use the Sort By menu to arrange the network matrix by locality (e.g., cloud, region, availability zone, datacenter).

Use the Filter menu to select specific nodes or localities to view.

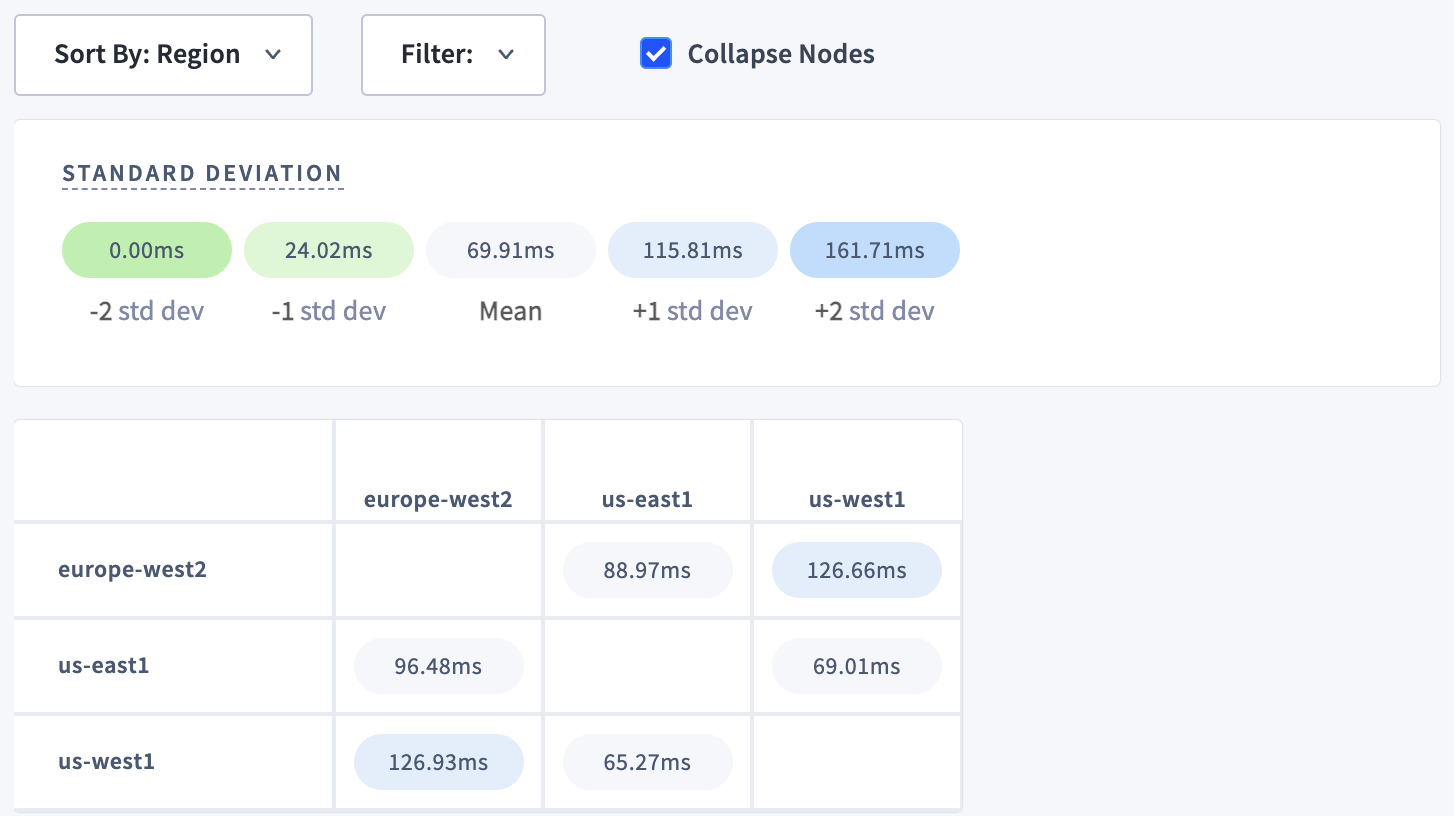
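To see what sorting and filtering by locality mean for the underlying data, here is a minimal sketch. It assumes a hypothetical `Node` record that holds a node ID and its locality tiers as a dictionary. This is not a DB Console or CockroachDB API type, and the DB Console's own ordering rules may differ.

```python
from dataclasses import dataclass


@dataclass
class Node:
    # Hypothetical node record for illustration only.
    node_id: int
    locality: dict[str, str]  # e.g. {"region": "us-east1", "zone": "us-east1-b"}


def sort_by_locality(nodes: list[Node], tier: str) -> list[Node]:
    """Order nodes by one locality tier (e.g. "region"), then by node ID."""
    return sorted(nodes, key=lambda n: (n.locality.get(tier, ""), n.node_id))


def filter_nodes(nodes: list[Node], tier: str, values: set[str]) -> list[Node]:
    """Keep only nodes whose value for the given tier is one of the selected values."""
    return [n for n in nodes if n.locality.get(tier) in values]


nodes = [
    Node(1, {"region": "us-west1"}),
    Node(2, {"region": "europe-west2"}),
    Node(3, {"region": "us-east1"}),
    Node(4, {"region": "us-west1"}),
]
print([n.node_id for n in sort_by_locality(nodes, "region")])
print([n.node_id for n in filter_nodes(nodes, "region", {"us-west1"})])
```

Sorting by region groups nodes 1 and 4 together, which is why same-region cells end up adjacent in the matrix.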

Select Collapse Nodes to display the mean latencies of each locality, depending on how the matrix is sorted. This is a way to quickly assess cross-regional or cross-cloud latency.
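The following sketch shows one way that collapsing nodes could be computed. It assumes a simple arithmetic mean over all node-pair latencies that share the same (origin locality, destination locality) pair. The input dictionaries and the `collapse` helper are hypothetical and are not how the DB Console is implemented.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical input: round-trip latencies in ms keyed by (origin, destination) node ID.
latencies = {
    (1, 2): 2.0, (2, 1): 2.0,
    (1, 3): 60.0, (3, 1): 62.0,
    (2, 3): 64.0, (3, 2): 66.0,
}
region = {1: "us-east1", 2: "us-east1", 3: "us-west1"}


def collapse(latencies, locality_of):
    """Average node-pair latencies into one value per locality pair."""
    groups = defaultdict(list)
    for (origin, dest), ms in latencies.items():
        groups[(locality_of[origin], locality_of[dest])].append(ms)
    return {pair: mean(values) for pair, values in groups.items()}


collapsed = collapse(latencies, region)
print(collapsed[("us-east1", "us-west1")])  # mean of the 1->3 and 2->3 latencies
```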

Interpret the network matrix

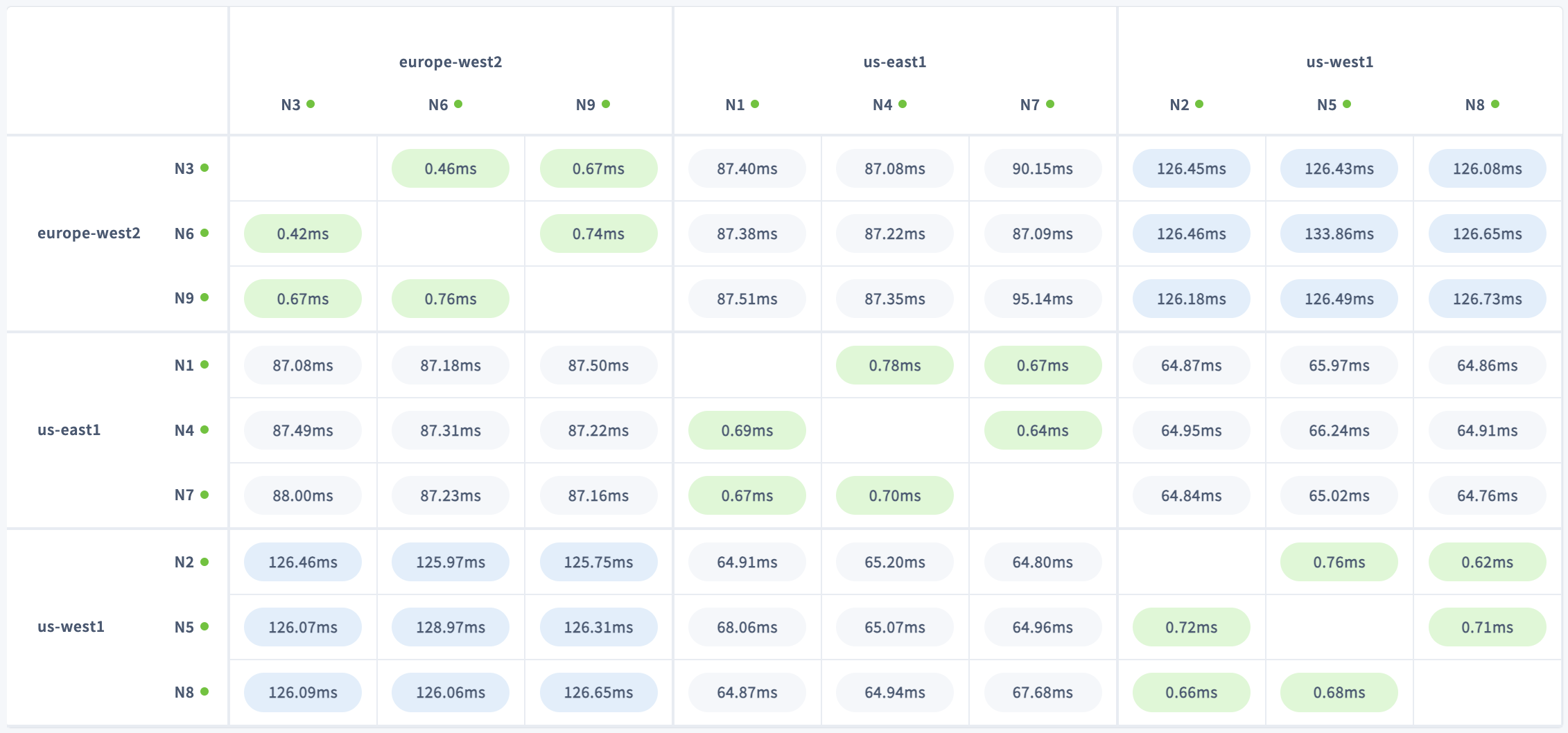

Each cell in the matrix displays the round-trip latency, in milliseconds, between two nodes in your cluster. Round-trip latency includes the time for a packet to reach its destination and return. Latencies are color-coded by their standard deviation from the mean latency on the network: green for lower values and blue for higher values. Cells with the lowest latencies are displayed in darker green, and cells with the highest latencies are displayed in darker blue.
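The color scale is based on how far each latency sits from the mean. The following sketch expresses that distance as a z-score. It assumes a population standard deviation and uses hypothetical cut points at 0 and ±1 standard deviation. The DB Console's actual thresholds and shades are not documented here, so treat `color_bucket` as illustrative only.

```python
from statistics import mean, pstdev


def color_bucket(value: float, values: list[float]) -> str:
    """Map a latency to a color by its distance from the mean, in standard deviations.

    The cut points (0 and +/-1 standard deviation) are assumptions for illustration.
    """
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return "green"  # all latencies are equal, so none stands out
    z = (value - mu) / sigma
    if z <= -1:
        return "dark green"
    if z < 0:
        return "green"
    if z < 1:
        return "blue"
    return "dark blue"


lat = [10.0, 20.0, 30.0, 40.0, 50.0]
print([color_bucket(v, lat) for v in lat])
```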

Rows represent origin nodes, and columns represent destination nodes. Hover over a cell to display more details:

- The direction of the connection, marked by From and To.

- Locality metadata for origin and destination.

- Round-trip latency.

The page automatically refreshes every 30 seconds to show the most recent information.

On a typical multi-region cluster, you can expect much lower latencies between nodes in the same region/availability zone. Nodes in different regions/availability zones, meanwhile, will experience higher latencies that reflect their geographical distribution.

For instance, the cluster shown above has nodes in us-west1, us-east1, and europe-west2. Latencies are highest between nodes in us-west1 and europe-west2, which span the greatest distance. This is especially clear when sorting by region or availability zone and collapsing nodes:

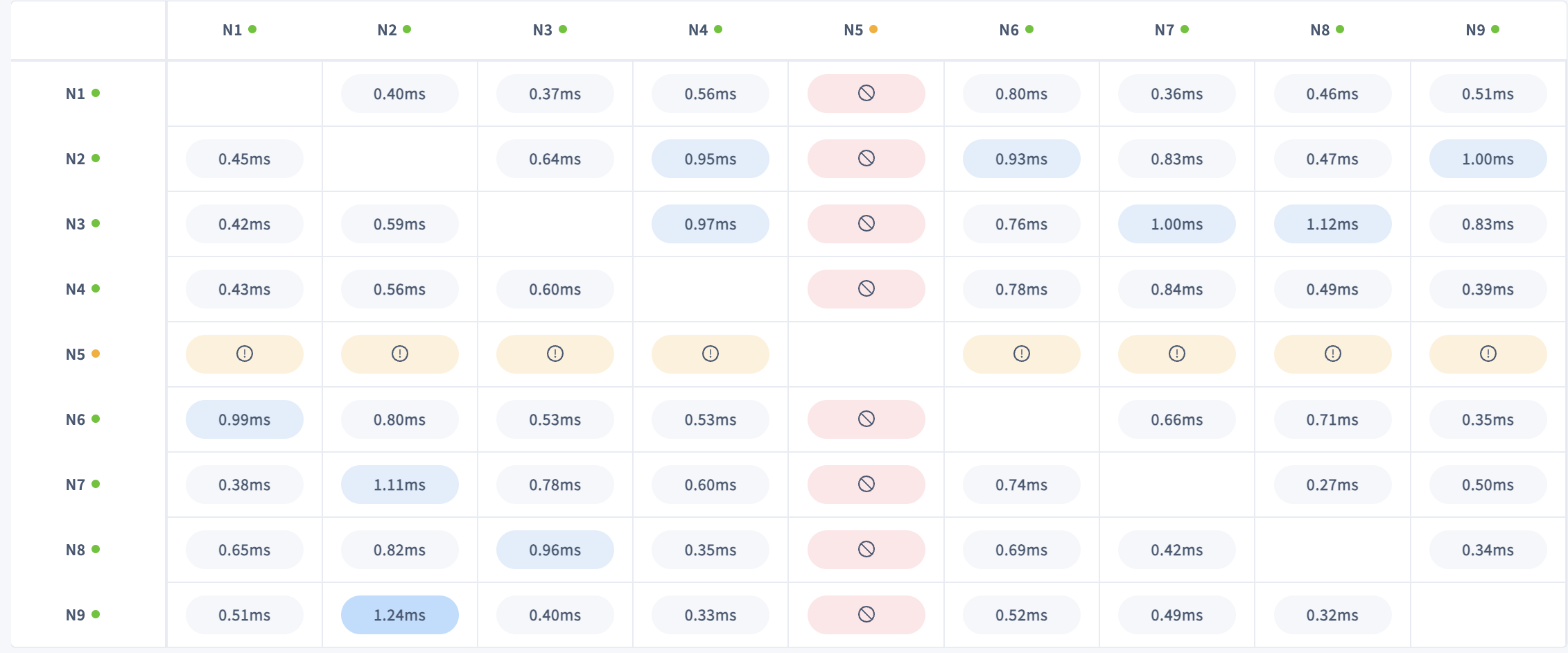

No connections

Nodes that have completely lost connectivity are color-coded depending on connection status:

- Orange with an exclamation mark indicates an unknown connection state.

- Red with a no symbol indicates a failed connection.

This information can help you diagnose a network partition in your cluster.

Hover over a cell to display more details:

- The direction of the connection, marked by From and To.

- Locality metadata for origin and destination.

- Connection status.

- The error message that resulted from the most recent connection attempt.

This specific information can help you understand the root cause of the connectivity issue.

A network partition occurs when two or more nodes are prevented from communicating with each other in one or both directions. A network partition can be caused by a network outage or a configuration problem with the network, such as when allowlisted IP addresses or hostnames change after a node is torn down and rebuilt. In a symmetric partition, node communication is disrupted in both directions. In an asymmetric partition, nodes can communicate in one direction but not the other.
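Because the matrix is directional (rows are origins, columns are destinations), you can tell symmetric and asymmetric partitions apart by checking both cells for a node pair. The following sketch assumes a hypothetical reachability map that you built yourself from the matrix, for example by treating failed cells as unreachable. The `classify` function and `Partition` enum are illustrative and are not part of CockroachDB.

```python
from enum import Enum


class Partition(Enum):
    NONE = "connected"
    SYMMETRIC = "symmetric"
    ASYMMETRIC = "asymmetric"


def classify(reachable: dict[tuple[int, int], bool], a: int, b: int) -> Partition:
    """Classify the link between nodes a and b from a directed reachability map.

    reachable[(origin, destination)] is True if origin can reach destination,
    mirroring the matrix layout (rows are origins, columns are destinations).
    """
    forward = reachable[(a, b)]
    backward = reachable[(b, a)]
    if forward and backward:
        return Partition.NONE
    if not forward and not backward:
        return Partition.SYMMETRIC  # cut in both directions
    return Partition.ASYMMETRIC  # cut in one direction only


reachable = {
    (1, 2): True, (2, 1): True,    # healthy link
    (1, 3): False, (3, 1): False,  # disrupted in both directions
    (2, 3): True, (3, 2): False,   # disrupted in one direction only
}
for pair in [(1, 2), (1, 3), (2, 3)]:
    print(pair, classify(reachable, *pair).value)
```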

The effect of a network partition depends on which nodes are partitioned, where the ranges are located, and to a large extent, whether localities are defined. If localities are not defined, a partition that cuts off at least (n-1)/2 nodes will cause data unavailability.

Node liveness status

Hover over a node's ID in the row and column headers to show the node's liveness status, such as healthy or suspect. Node liveness status is also indicated by the colored circle next to the Node ID: green for healthy or red for suspect.

If a suspect node stays offline for the duration set by server.time_until_store_dead (5 minutes by default), the cluster considers the node "dead" and the node is removed from the matrix.
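The following sketch models the healthy, suspect, and dead progression described above. It is a deliberately simplified model: CockroachDB's real liveness tracking is based on heartbeats and involves more than a single timer. The `liveness_status` function is hypothetical. Only the 5-minute default comes from the text above, and in a real cluster the value comes from the server.time_until_store_dead cluster setting.

```python
from datetime import timedelta
from typing import Optional

# Default from the text above; a real cluster reads server.time_until_store_dead.
TIME_UNTIL_STORE_DEAD = timedelta(minutes=5)


def liveness_status(offline_for: Optional[timedelta],
                    threshold: timedelta = TIME_UNTIL_STORE_DEAD) -> str:
    """Simplified status model; offline_for=None means the node is reachable."""
    if offline_for is None:
        return "healthy"
    if offline_for < threshold:
        return "suspect"
    return "dead"  # at this point the node is removed from the Network matrix


print(liveness_status(None))
print(liveness_status(timedelta(seconds=90)))
print(liveness_status(timedelta(minutes=5)))
```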

The number of LIVE (healthy), SUSPECT, DRAINING and DEAD nodes is displayed under Node Status on the Cluster Overview page.

Topology fundamentals

- Multi-region topology patterns are almost always table-specific. If you haven't already, review the full range of patterns to ensure you choose the right one for each of your tables.

- Review how data is replicated and distributed across a cluster, and how this affects performance. It is especially important to understand the concept of the "leaseholder". For a summary, see Reads and Writes in CockroachDB. For a deeper dive, see the CockroachDB Architecture Overview.

- Review the concept of locality, which CockroachDB uses to place and balance data based on how you define replication controls.

- Review the recommendations and requirements in our Production Checklist.

- Topology patterns don't account for hardware specifications, so be sure to follow our hardware recommendations and perform a proof of concept (POC) to size hardware for your use case. For optimal cluster performance, Cockroach Labs recommends that all nodes use the same hardware and operating system.

- Adopt relevant SQL Best Practices to ensure optimal performance.

Network latency limits the performance of individual operations. You can use the Statements page to see the latencies of SQL statements on gateway nodes.