This page provides best practices for optimizing query performance in CockroachDB.

DML best practices

Use multi-row statements instead of multiple single-row statements

For INSERT, UPSERT, and DELETE statements, a single multi-row statement is faster than multiple single-row statements. Whenever possible, use multi-row statements for DML queries instead of multiple single-row statements.

For more information, see:

Use UPSERT instead of INSERT ON CONFLICT on tables with no secondary indexes

When inserting/updating all columns of a table, and the table has no secondary

indexes, Cockroach Labs recommends using an UPSERT statement instead of the

equivalent INSERT ON CONFLICT statement. Whereas INSERT ON

CONFLICT always performs a read to determine the necessary writes, the UPSERT

statement writes without reading, making it faster. For tables with secondary

indexes, there is no performance difference between UPSERT and INSERT ON

CONFLICT.

This issue is particularly relevant when using a simple SQL table of two columns

to simulate direct KV access.

In this case, be sure to use the UPSERT statement.

Bulk-insert best practices

Use multi-row INSERT statements for bulk-inserts into existing tables

To bulk-insert data into an existing table, batch multiple rows in one multi-row INSERT statement. Experimentally determine the optimal batch size for your application by monitoring the performance for different batch sizes (10 rows, 100 rows, 1000 rows). Do not include bulk INSERT statements within an explicit transaction.

You can also use the IMPORT INTO statement to bulk-insert CSV data into an existing table.

For more information, see Insert Multiple Rows.

Large multi-row INSERT queries can lead to long-running transactions that result in transaction retry errors. If a multi-row INSERT query results in an error code 40001 with the message "transaction deadline exceeded", we recommend breaking up the query up into smaller batches of rows.

Use IMPORT instead of INSERT for bulk-inserts into new tables

To bulk-insert data into a brand new table, the IMPORT statement performs better than INSERT.

Bulk-update best practices

Use batch updates to delete a large number of rows

To delete a large number of rows, we recommend iteratively deleting batches of rows until all of the unwanted rows are deleted. For an example, see Bulk-update Data.

Bulk-delete best practices

Use TRUNCATE instead of DELETE to delete all rows in a table

The TRUNCATE statement removes all rows from a table by dropping the table and recreating a new table with the same name. This performs better than using DELETE, which performs multiple transactions to delete all rows.

Use batch deletes to delete a large number of rows

To delete a large number of rows, we recommend iteratively deleting batches of rows until all of the unwanted rows are deleted. For an example, see Bulk-delete Data.

Batch delete "expired" data

New in v22.1: CockroachDB has preview support for Time to Live ("TTL") expiration on table rows, also known as Row-Level TTL. Row-Level TTL is a mechanism whereby rows from a table are considered "expired" and can be automatically deleted once those rows have been stored longer than a specified expiration time.

For more information, see Batch delete expired data with Row-Level TTL.

Assign column families

A column family is a group of columns in a table that is stored as a single key-value pair in the underlying key-value store.

When a table is created, all columns are stored as a single column family. This default approach ensures efficient key-value storage and performance in most cases. However, when frequently updated columns are grouped with seldom updated columns, the seldom updated columns are nonetheless rewritten on every update. Especially when the seldom updated columns are large, it's therefore more performant to assign them to a distinct column family.

Unique ID best practices

The best practices for generating unique IDs in a distributed database like CockroachDB are very different than for a legacy single-node database. Traditional approaches for generating unique IDs for legacy single-node databases include:

- Using the

SERIALpseudo-type for a column to generate random unique IDs. This can result in a performance bottleneck because IDs generated temporally near each other have similar values and are located physically near each other in a table's storage. - Generating monotonically increasing

INTIDs by using transactions with roundtripSELECTs, e.g.,INSERT INTO tbl (id, …) VALUES ((SELECT max(id)+1 FROM tbl), …). This has a very high performance cost since it makes allINSERTtransactions wait for their turn to insert the next ID. You should only do this if your application really does require strict ID ordering. In some cases, using Change Data Capture (CDC) can help avoid the requirement for strict ID ordering. If you can avoid the requirement for strict ID ordering, you can use one of the higher performance ID strategies outlined below.

The approaches described above are likely to create hot spots for both reads and writes in CockroachDB. To avoid this issue, we recommend the following approaches (listed in order from best to worst performance).

| Approach | Pros | Cons |

|---|---|---|

| 1. Use multi-column primary keys | Potentially fastest, if done right | Complex, requires up-front design and testing to ensure performance |

2. Use UUID to generate unique IDs |

Good performance; spreads load well; easy choice | May leave some performance on the table; requires other columns to be useful in queries |

3. Use INSERT with the RETURNING clause |

Easy to query against; familiar design | Slower performance than the other options; higher chance of transaction contention |

Use multi-column primary keys

A well-designed multi-column primary key can yield even better performance than a UUID primary key, but it requires more up-front schema design work. To get the best performance, ensure that any monotonically increasing field is located after the first column of the primary key. When done right, such a composite primary key should result in:

- Enough randomness in your primary key to spread the table data / query load relatively evenly across the cluster, which will avoid hot spots. By "enough randomness" we mean that the prefix of the primary key should be relatively uniformly distributed over its domain. Its domain should have at least as many elements as you have nodes.

- A monotonically increasing column that is part of the primary key (and thus indexed) which is also useful in your queries.

For example, consider a social media website. Social media posts are written by users, and on login the user's last 10 posts are displayed. A good choice for a primary key might be (username, post_timestamp). For example:

> CREATE TABLE posts (

username STRING,

post_timestamp TIMESTAMP,

post_id INT,

post_content STRING,

CONSTRAINT posts_pk PRIMARY KEY(username, post_timestamp)

);

This would make the following query efficient.

> SELECT * FROM posts

WHERE username = 'alyssa'

ORDER BY post_timestamp DESC

LIMIT 10;

username | post_timestamp | post_id | post_content

+----------+---------------------------+---------+--------------+

alyssa | 2019-07-31 18:01:00+00:00 | ... | ...

alyssa | 2019-07-30 10:22:00+00:00 | ... | ...

alyssa | 2019-07-30 09:12:00+00:00 | ... | ...

alyssa | 2019-07-29 13:48:00+00:00 | ... | ...

alyssa | 2019-07-29 13:47:00+00:00 | ... | ...

alyssa | 2019-07-29 13:46:00+00:00 | ... | ...

alyssa | 2019-07-29 13:43:00+00:00 | ... | ...

...

Time: 924µs

To see why, let's look at the EXPLAIN output. It shows that the query is fast because it does a point lookup on the indexed column username (as shown by the line spans | /"alyssa"-...). Furthermore, the column post_timestamp is already in an index, and sorted (since it's a monotonically increasing part of the primary key).

> EXPLAIN (VERBOSE)

SELECT * FROM posts

WHERE username = 'alyssa'

ORDER BY post_timestamp DESC

LIMIT 10;

info

----------------------------------------------------------------

distribution: local

vectorized: true

• revscan

columns: (username, post_timestamp, post_id, post_content)

ordering: -post_timestamp

estimated row count: 10 (missing stats)

table: posts@posts_pk

spans: /"alyssa"-/"alyssa"/PrefixEnd

limit: 10

(10 rows)

Time: 1ms total (execution 1ms / network 0ms)

Note that the above query also follows the indexing best practice of indexing all columns in the WHERE clause.

Use UUID to generate unique IDs

To auto-generate unique row identifiers, use the UUID column with the gen_random_uuid() function as the default value:

> CREATE TABLE users (

id UUID NOT NULL DEFAULT gen_random_uuid(),

city STRING NOT NULL,

name STRING NULL,

address STRING NULL,

credit_card STRING NULL,

CONSTRAINT "primary" PRIMARY KEY (city ASC, id ASC),

FAMILY "primary" (id, city, name, address, credit_card)

);

> INSERT INTO users (name, city) VALUES ('Petee', 'new york'), ('Eric', 'seattle'), ('Dan', 'seattle');

> SELECT * FROM users;

id | city | name | address | credit_card

+--------------------------------------+----------+-------+---------+-------------+

cf8ee4e2-cd74-449a-b6e6-a0fb2017baa4 | new york | Petee | NULL | NULL

2382564e-702f-42d9-a139-b6df535ae00a | seattle | Eric | NULL | NULL

7d27e40b-263a-4891-b29b-d59135e55650 | seattle | Dan | NULL | NULL

(3 rows)

Alternatively, you can use the BYTES column with the uuid_v4() function as the default value instead:

> CREATE TABLE users2 (

id BYTES DEFAULT uuid_v4(),

city STRING NOT NULL,

name STRING NULL,

address STRING NULL,

credit_card STRING NULL,

CONSTRAINT "primary" PRIMARY KEY (city ASC, id ASC),

FAMILY "primary" (id, city, name, address, credit_card)

);

> INSERT INTO users2 (name, city) VALUES ('Anna', 'new york'), ('Jonah', 'seattle'), ('Terry', 'chicago');

> SELECT * FROM users;

id | city | name | address | credit_card

+------------------------------------------------+----------+-------+---------+-------------+

4\244\277\323/\261M\007\213\275*\0060\346\025z | chicago | Terry | NULL | NULL

\273*t=u.F\010\274f/}\313\332\373a | new york | Anna | NULL | NULL

\004\\\364nP\024L)\252\364\222r$\274O0 | seattle | Jonah | NULL | NULL

(3 rows)

In either case, generated IDs will be 128-bit, large enough for there to be virtually no chance of generating non-unique values. Also, once the table grows beyond a single key-value range (more than 512 MiB by default), new IDs will be scattered across all of the table's ranges and, therefore, likely across different nodes. This means that multiple nodes will share in the load.

This approach has the disadvantage of creating a primary key that may not be useful in a query directly, which can require a join with another table or a secondary index.

If it is important for generated IDs to be stored in the same key-value range, you can use an integer type with the unique_rowid() function as the default value, either explicitly or via the SERIAL pseudo-type:

> CREATE TABLE users3 (

id INT DEFAULT unique_rowid(),

city STRING NOT NULL,

name STRING NULL,

address STRING NULL,

credit_card STRING NULL,

CONSTRAINT "primary" PRIMARY KEY (city ASC, id ASC),

FAMILY "primary" (id, city, name, address, credit_card)

);

> INSERT INTO users3 (name, city) VALUES ('Blake', 'chicago'), ('Hannah', 'seattle'), ('Bobby', 'seattle');

> SELECT * FROM users3;

id | city | name | address | credit_card

+--------------------+---------+--------+---------+-------------+

469048192112197633 | chicago | Blake | NULL | NULL

469048192112263169 | seattle | Hannah | NULL | NULL

469048192112295937 | seattle | Bobby | NULL | NULL

(3 rows)

Upon insert or upsert, the unique_rowid() function generates a default value from the timestamp and ID of the node executing the insert. Such time-ordered values are likely to be globally unique except in cases where a very large number of IDs (100,000+) are generated per node per second. Also, there can be gaps and the order is not completely guaranteed.

For further background on UUIDs, see What is a UUID, and Why Should You Care?.

Use INSERT with the RETURNING clause to generate unique IDs

If something prevents you from using multi-column primary keys or UUIDs to generate unique IDs, you might resort to using INSERTs with SELECTs to return IDs. Instead, use the RETURNING clause with the INSERT statement as shown below for improved performance.

Generate monotonically-increasing unique IDs

Suppose the table schema is as follows:

> CREATE TABLE X (

ID1 INT,

ID2 INT,

ID3 INT DEFAULT 1,

PRIMARY KEY (ID1,ID2)

);

The common approach would be to use a transaction with an INSERT followed by a SELECT:

> BEGIN;

> INSERT INTO X VALUES (1,1,1)

ON CONFLICT (ID1,ID2)

DO UPDATE SET ID3=X.ID3+1;

> SELECT * FROM X WHERE ID1=1 AND ID2=1;

> COMMIT;

However, the performance best practice is to use a RETURNING clause with INSERT instead of the transaction:

> INSERT INTO X VALUES (1,1,1),(2,2,2),(3,3,3)

ON CONFLICT (ID1,ID2)

DO UPDATE SET ID3=X.ID3 + 1

RETURNING ID1,ID2,ID3;

Generate random unique IDs

Suppose the table schema is as follows:

> CREATE TABLE X (

ID1 INT,

ID2 INT,

ID3 INT DEFAULT unique_rowid(),

PRIMARY KEY (ID1,ID2)

);

The common approach to generate random Unique IDs is a transaction using a SELECT statement:

> BEGIN;

> INSERT INTO X VALUES (1,1);

> SELECT * FROM X WHERE ID1=1 AND ID2=1;

> COMMIT;

However, the performance best practice is to use a RETURNING clause with INSERT instead of the transaction:

> INSERT INTO X VALUES (1,1),(2,2),(3,3)

RETURNING ID1,ID2,ID3;

Secondary index best practices

See Secondary Index Best Practices.

Join best practices

See Join Performance Best Practices.

Subquery best practices

See Subquery Performance Best Practices.

Authorization best practices

See Authorization Best Practices.

Table scan best practices

Avoid SELECT * for large tables

For large tables, avoid table scans (that is, reading the entire table data) whenever possible. Instead, define the required fields in a SELECT statement.

Example

Suppose the table schema is as follows:

> CREATE TABLE accounts (

id INT,

customer STRING,

address STRING,

balance INT

nominee STRING

);

Now if we want to find the account balances of all customers, an inefficient table scan would be:

> SELECT * FROM ACCOUNTS;

This query retrieves all data stored in the table. A more efficient query would be:

> SELECT CUSTOMER, BALANCE FROM ACCOUNTS;

This query returns the account balances of the customers.

Avoid SELECT DISTINCT for large tables

SELECT DISTINCT allows you to obtain unique entries from a query by removing duplicate entries. However, SELECT DISTINCT is computationally expensive. As a performance best practice, use SELECT with the WHERE clause instead.

Use AS OF SYSTEM TIME to decrease conflicts with long-running queries

If you have long-running queries (such as analytics queries that perform full table scans) that can tolerate slightly out-of-date reads, consider using the ... AS OF SYSTEM TIME clause. Using this, your query returns data as it appeared at a distinct point in the past and will not cause conflicts with other concurrent transactions, which can increase your application's performance.

However, because AS OF SYSTEM TIME returns historical data, your reads might be stale.

Hot spots

A hot spot is any location on the cluster receiving significantly more requests than another. Hot spots can cause problems as requests increase.

They commonly occur with transactions that operate on the same range but different index keys, which are limited by the overall hardware capacity of the range leaseholder node.

A hot spot can occur on a range that is indexed on a column of data that is sequential in nature (e.g., an ordered sequence, or a series of increasing, non-repeating TIMESTAMPs), such that all incoming writes to the range will be the last (or first) item in the index and appended to the end of the range. Because the system is unable to find a split point in the range that evenly divides the traffic, the range cannot benefit from load-based splitting. This creates a hot spot at the single range.

Read hot spots can occur if you perform lots of scans of a portion of a table index or a single key.

Find hot spots

To track down the nodes experiencing hot spots, use the Hot Ranges page and Range Report.

Reduce hot spots

To reduce hot spots:

Use index keys with a random distribution of values, so that transactions over different rows are more likely to operate on separate data ranges. See the SQL FAQs on row IDs for suggestions.

Place parts of the records that are modified by different transactions in different tables. That is, increase normalization. However, there are benefits and drawbacks to increasing normalization.

Benefits:

- Allows separate transactions to modify related underlying data without causing contention.

- Can improve performance for read-heavy workloads.

Drawbacks:

- More complex data model.

- Increases the chance of data inconsistency.

- Increases data redundancy.

- Can degrade performance for write-heavy workloads.

If the application strictly requires operating on very few different index keys, consider using

ALTER ... SPLIT ATso that each index key can be served by a separate group of nodes in the cluster.If you are working with a table that must be indexed on sequential keys, consider using hash-sharded indexes. For details about the mechanics and performance improvements of hash-sharded indexes in CockroachDB, see the blog post Hash Sharded Indexes Unlock Linear Scaling for Sequential Workloads. As part of this, we recommend doing thorough performance testing with and without hash-sharded indexes to see which works best for your application.

To avoid read hot spots:

- Increase data distribution, which will allow for more ranges. The hot spot exists because the data being accessed is all co-located in one range.

Increase load balancing across more nodes in the same range. Most transactional reads must go to the leaseholder in CockroachDB, which means that opportunities for load balancing over replicas are minimal.

However, the following features do permit load balancing over replicas:

- Global tables

- Follower reads (both the bounded staleness and the exact staleness kinds)

In these cases, more replicas will help, up to the number of nodes in the cluster. They all only help with reads, and they all come with their own tradeoffs.

Transaction contention

Transactions that operate on the same index key values (specifically, that operate on the same column family for a given index key) are strictly serialized to obey transaction isolation semantics. To maintain this isolation, writing transactions "lock" rows to prevent hazardous interactions with concurrent transactions. However, locking can lead to processing delays if multiple transactions are trying to access the same "locked" data at the same time. This is referred to as transaction (or lock) contention.

Transaction contention occurs when the following three conditions are met:

- There are multiple concurrent transactions or statements (sent by multiple clients connected simultaneously to a single CockroachDB cluster).

- They operate on table rows with the same index key values (either on primary keys or secondary indexes).

- At least one of the transactions modify the data.

Transactions that experience contention typically show delays in completion or restart transaction errors with the error code 40001. The possibility of transaction restarts requires clients to implement transaction retries.

For further background on transaction contention, see What is Database Contention, and Why Should You Care?.

Indicators your application is experiencing transaction contention

- Your application is experiencing degraded performance with transaction errors like

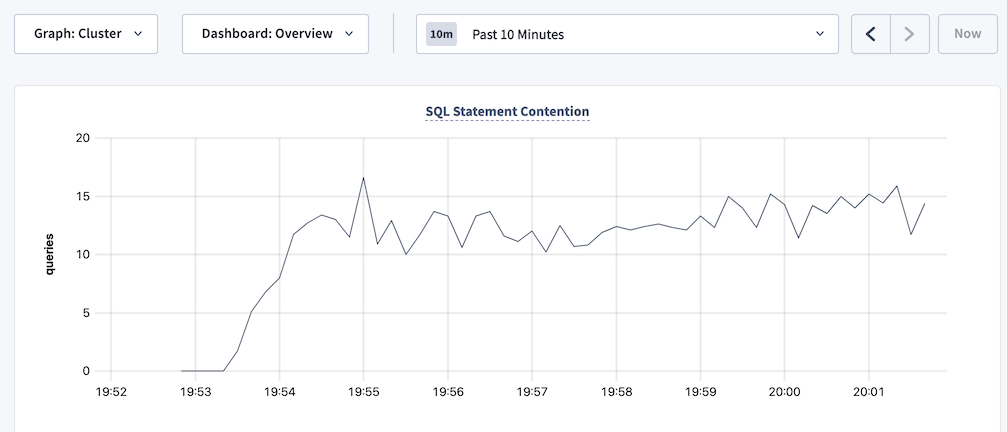

SQLSTATE: 40001,RETRY_WRITE_TOO_OLD, andRETRY_SERIALIZABLE. See Transaction Retry Error Reference. - The SQL Statement Contention graph is showing spikes over time.

- The Transaction Restarts graph is showing spikes in retries over time.

Find transaction contention

Find the transactions and statements within the transactions that are experiencing contention. CockroachDB has several tools to help you track down such transactions and statements:

- In DB Console, visit the Transactions and Statements pages and sort transactions and statements by contention.

Query the following tables:

crdb_internal.cluster_contended_indexesandcrdb_internal.cluster_contended_tablestables for your database to find the indexes and tables that are experiencing contention.crdb_internal.cluster_locksto find out which transactions are holding locks on which objects.crdb_internal.cluster_contention_eventsto view the tables/indexes with the most time under contention.

After you identify the transactions or statements that are causing contention, follow the steps in the next section to avoid contention.

If you experience a hanging or stuck query that is not showing up in the list of contended transactions and statements on the Transactions or Statements pages in the DB Console, the process described above will not work. You will need to follow the process described in Hanging or stuck queries instead.

Reduce transaction contention

To reduce transaction contention:

Make transactions smaller, so that each transaction has less work to do. In particular, avoid multiple client-server exchanges per transaction. For example, use common table expressions to group multiple

SELECTandINSERT,UPDATE,DELETE, andUPSERTclauses together in a single SQL statement.- For an example showing how to break up large transactions in an application, see Break up large transactions into smaller units of work.

- If you are experiencing contention (retries) when doing bulk deletes, see Bulk-delete data.

Send all of the statements in your transaction in a single batch so that CockroachDB can automatically retry the transaction for you.

Use the

SELECT FOR UPDATEstatement in scenarios where a transaction performs a read and then updates the row(s) it just read. The statement orders transactions by controlling concurrent access to one or more rows of a table. It works by locking the rows returned by a selection query, such that other transactions trying to access those rows are forced to wait for the transaction that locked the rows to finish. These other transactions are effectively put into a queue that is ordered based on when they try to read the value of the locked row(s).When replacing values in a row, use

UPSERTand specify values for all columns in the inserted rows. This will usually have the best performance under contention, compared to combinations ofSELECT,INSERT, andUPDATE.

Improve transaction performance by sizing and configuring the cluster

To maximize transaction performance, you'll need to maximize the performance of a single range. To achieve this, you can apply multiple strategies:

- Minimize the network distance between the replicas of a range, possibly using zone configs and partitioning, or the newer Multi-region SQL capabilities.

- Use the fastest storage devices available.

- If the contending transactions operate on different keys within the same range, add more CPU power (more cores) per node. However, if the transactions all operate on the same key, this may not provide an improvement.

See also

- If you aren't sure whether SQL query performance needs to be improved on your cluster, see Identify slow queries.

- For deployment and data location techniques to minimize network latency in multi-region clusters, see Topology Patterns.

- To read more about SQL best practices, see our SQL Performance Best Practices blog post.