This page has instructions for setting up AWS Database Migration Service (DMS) to migrate data to CockroachDB from an existing, publicly hosted database that contains application data, such as a MySQL, Oracle, or PostgreSQL database.

For a detailed tutorial about using AWS DMS and information about specific migration tasks, see the AWS DMS documentation site.

This feature is in preview and is subject to change. To share feedback or report issues, contact Support.

For any issues related to AWS DMS, aside from its interaction with CockroachDB as a migration target, contact AWS Support.

Using CockroachDB as a source database within AWS DMS is unsupported.

Before you begin

Complete the following items before starting this tutorial:

- Configure a replication instance in AWS.

- Configure a source endpoint in AWS pointing to your source database.

- Ensure you have a secure, publicly available CockroachDB cluster running the latest v22.1 production release.

  - If your CockroachDB cluster is running v22.1.14 or later, set the following session variable using ALTER ROLE ... SET {session variable}:

        ALTER ROLE {username} SET copy_from_retries_enabled = true;

    This prevents a potential issue when migrating especially large tables with millions of rows.

- If you are migrating to a CockroachDB Cloud cluster and plan to use replication as part of your migration strategy, you must first disable revision history for cluster backups for the migration to succeed.

  Warning: You will not be able to run a point-in-time restore as long as revision history for cluster backups is disabled. Once you verify that the migration succeeded, you should re-enable revision history.

  - If the output of SHOW SCHEDULES shows any backup schedules, run ALTER BACKUP SCHEDULE {schedule_id} SET WITH revision_history = 'false' for each backup schedule.

  - If the output of SHOW SCHEDULES does not show backup schedules, contact Support to disable revision history for cluster backups.


- Manually create all schema objects in the target CockroachDB cluster. AWS DMS can create a basic schema, but it does not create indexes, constraints such as foreign keys, or column defaults.

  - If you are migrating from a PostgreSQL database, use the Schema Conversion Tool to convert and export your schema. Ensure that any schema changes are also reflected on your PostgreSQL tables, or add transformation rules. If you make substantial schema changes, the AWS DMS migration may fail.

  Note: All tables must have an explicitly defined primary key. For more guidance, see the Migration Overview.
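You can check two sets of version requirements from a script before you start. The first is the v22.1.14-or-later threshold for copy_from_retries_enabled in this list. The second is the minimum enforced by the diagnostic query under Troubleshooting common issues (v21.2.13+ or v22.1+). The following is a minimal Python sketch, assuming you pass it the string returned by SELECT version() on the target cluster. The helper names are hypothetical and are not part of CockroachDB or AWS tooling.

```python
import re
from typing import Optional, Tuple

# Matches the first "vMAJOR.MINOR.PATCH" token in a version() string such as
# "CockroachDB CCL v22.1.14 (x86_64-pc-linux-gnu, ...)".
_VERSION_RE = re.compile(r"v(\d+)\.(\d+)\.(\d+)")


def parse_crdb_version(version_string: str) -> Tuple[int, int, int]:
    """Extract (major, minor, patch) from the output of SELECT version()."""
    match = _VERSION_RE.search(version_string)
    if match is None:
        raise ValueError(f"no vX.Y.Z version found in {version_string!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def supports_aws_dms(version: Tuple[int, int, int]) -> bool:
    """Same rule as the invalid_version check: v21.2.13+ or any v22+ passes."""
    major, minor, patch = version
    return major >= 22 or (major == 21 and minor == 2 and patch >= 13)


def copy_retry_statement(version: Tuple[int, int, int], username: str) -> Optional[str]:
    """Return the ALTER ROLE statement for v22.1.14 or later, else None.

    The username is interpolated as-is, so this assumes a plain SQL identifier.
    """
    if version < (22, 1, 14):
        return None
    return f"ALTER ROLE {username} SET copy_from_retries_enabled = true;"
```

For example, parse_crdb_version("CockroachDB CCL v22.1.14 (x86_64-pc-linux-gnu)") returns (22, 1, 14). That version passes supports_aws_dms and also yields the ALTER ROLE statement.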

As of publishing, AWS DMS supports migrations from the following sources. For an up-to-date list, see Sources for AWS DMS.

- Amazon Aurora

- Amazon DocumentDB (with MongoDB compatibility)

- Amazon S3

- IBM Db2 (LUW edition only)

- MariaDB

- Microsoft Azure SQL

- Microsoft SQL Server

- MongoDB

- MySQL

- Oracle

- PostgreSQL

- SAP ASE

Step 1. Create a target endpoint pointing to CockroachDB

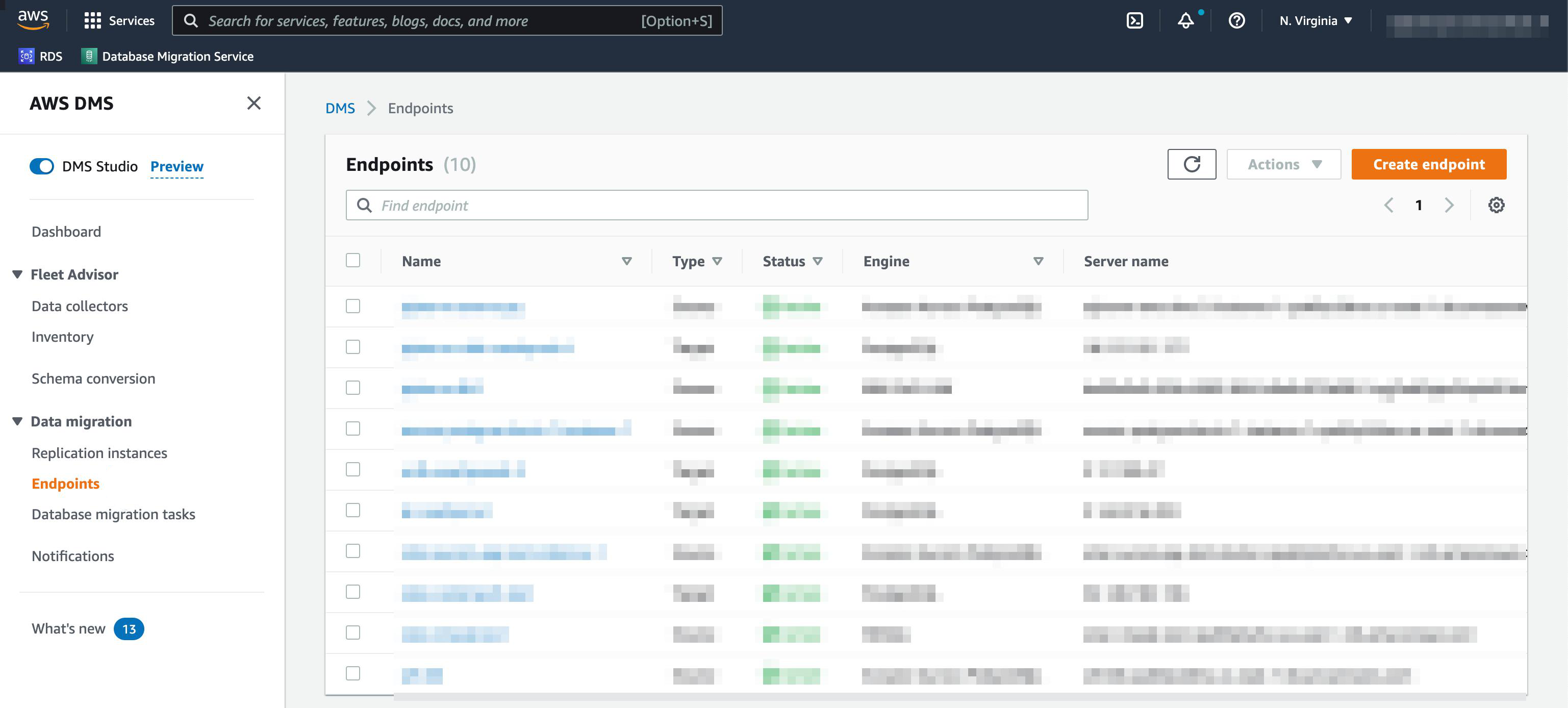

- In the AWS Console, open AWS DMS.

- Open Endpoints in the sidebar. A list of any existing endpoints is displayed.

- In the top-right portion of the window, select Create endpoint.

  A configuration page opens.

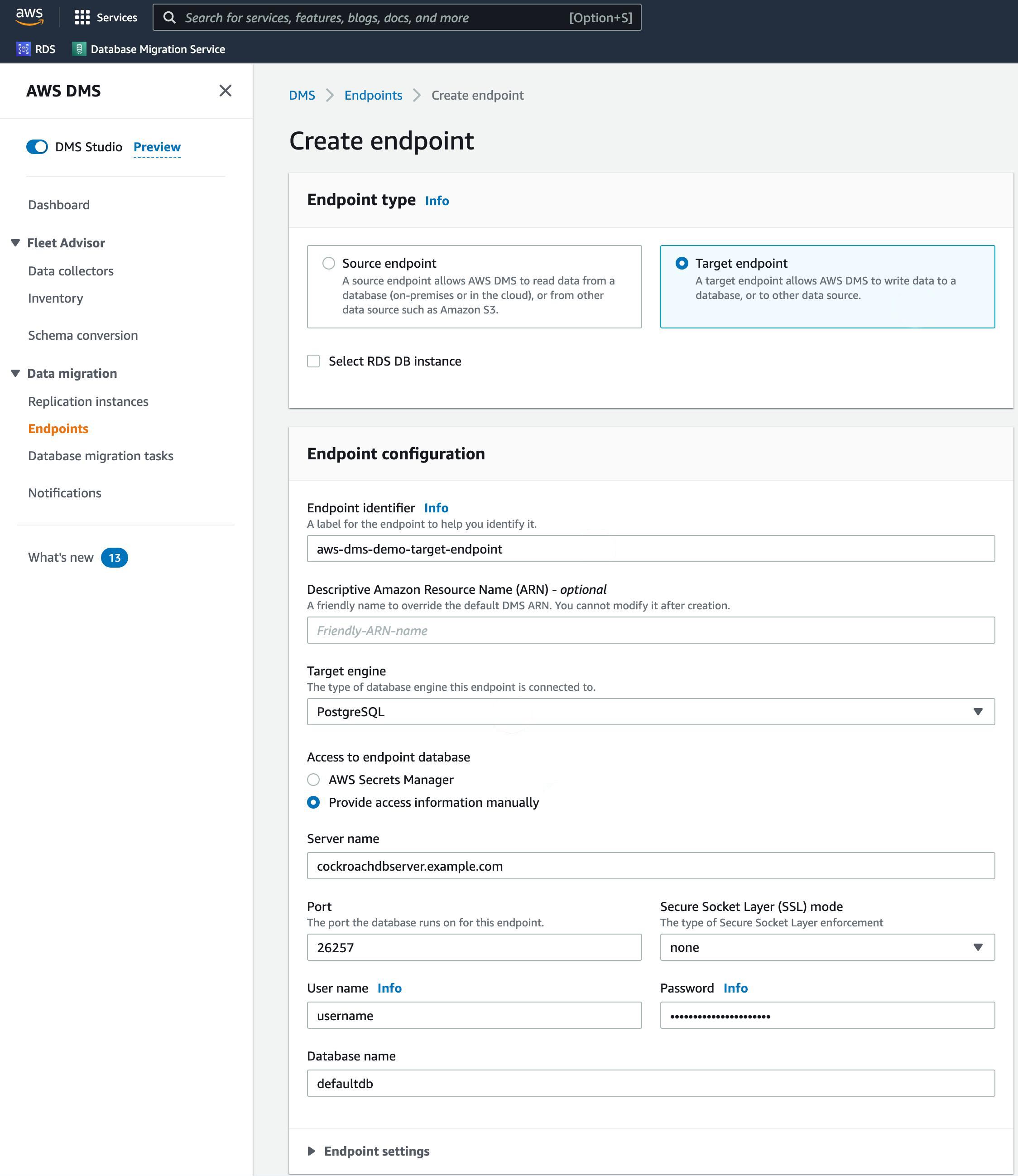

- In the Endpoint type section, select Target endpoint.

- Supply an Endpoint identifier to identify the new target endpoint.

- In the Target engine dropdown, select PostgreSQL.

- Under Access to endpoint database, select Provide access information manually.

- Enter the Server name and Port of your CockroachDB cluster.

- Supply a User name, Password, and Database name from your CockroachDB cluster.

  Note: To connect to a CockroachDB Serverless cluster, set the Database name to {serverless-hostname}.{database-name}. For details on how to find these parameters, see Connect to a CockroachDB Serverless cluster. Also set Secure Socket Layer (SSL) mode to require.

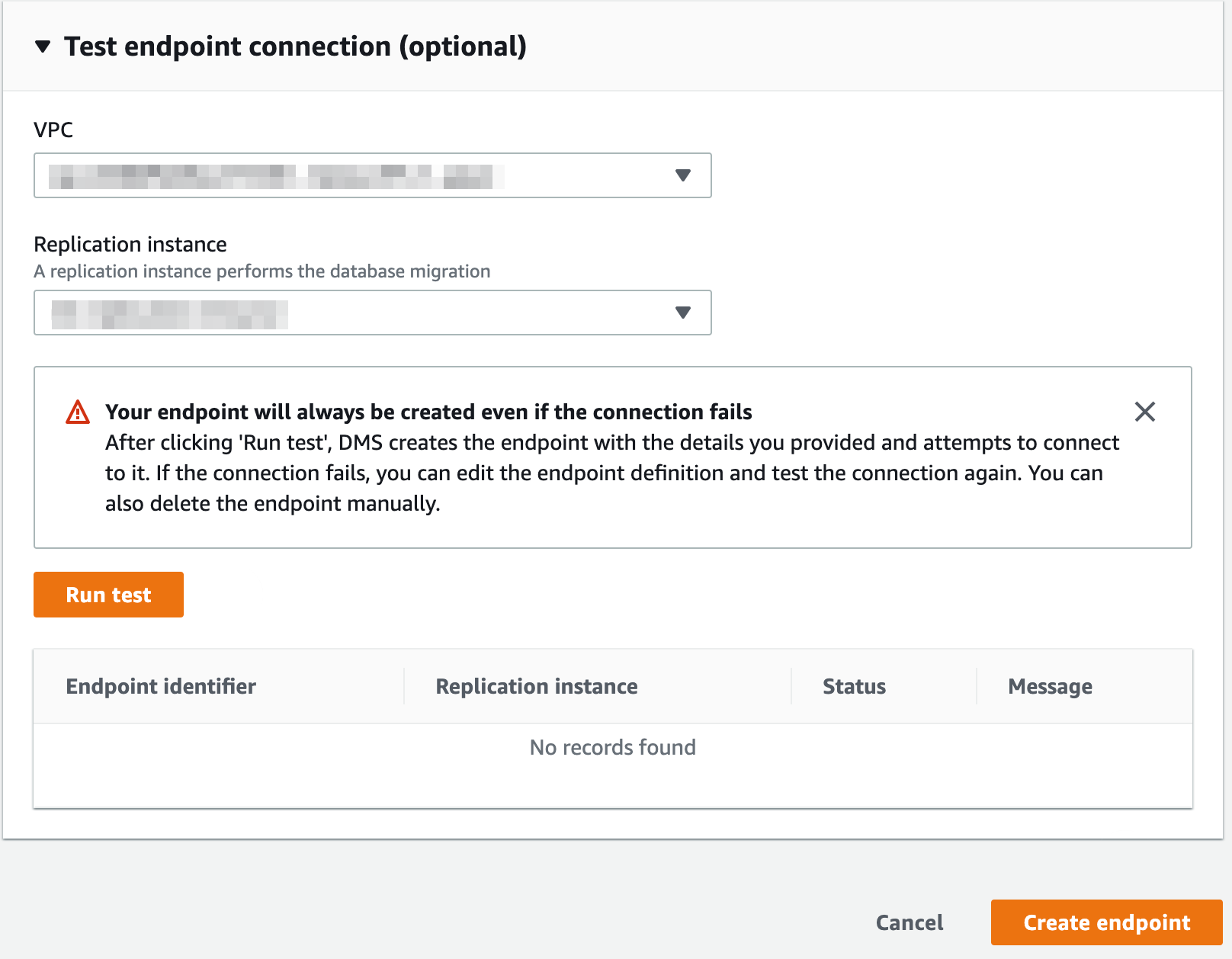

- If needed, you can test the connection under Test endpoint connection (optional).

- To create the endpoint, select Create endpoint.
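If you script endpoint creation instead of using the console, each field in this step corresponds to a parameter of the AWS DMS CreateEndpoint API. The following Python sketch only assembles those parameters. The argument values are placeholders. Passing the dictionary to an AWS client (for example, boto3's DMS client create_endpoint(**params)) is assumed rather than shown. The serverless_hostname argument stands for the {serverless-hostname} value in the CockroachDB Serverless note.

```python
from typing import Dict, Optional


def target_endpoint_params(
    identifier: str,
    server_name: str,
    port: int,
    username: str,
    password: str,
    database: str,
    serverless_hostname: Optional[str] = None,
) -> Dict[str, object]:
    """Build CreateEndpoint parameters for a CockroachDB target endpoint."""
    params: Dict[str, object] = {
        "EndpointIdentifier": identifier,
        "EndpointType": "target",
        # CockroachDB is registered as a PostgreSQL target, as in the console.
        "EngineName": "postgres",
        "ServerName": server_name,
        "Port": port,
        "Username": username,
        "Password": password,
        "DatabaseName": database,
    }
    if serverless_hostname is not None:
        # Serverless: prefix the database name and require SSL.
        params["DatabaseName"] = f"{serverless_hostname}.{database}"
        params["SslMode"] = "require"
    return params
```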

Step 2. Create a database migration task

A database migration task, also known as a replication task, controls what data are moved from the source database to the target database.

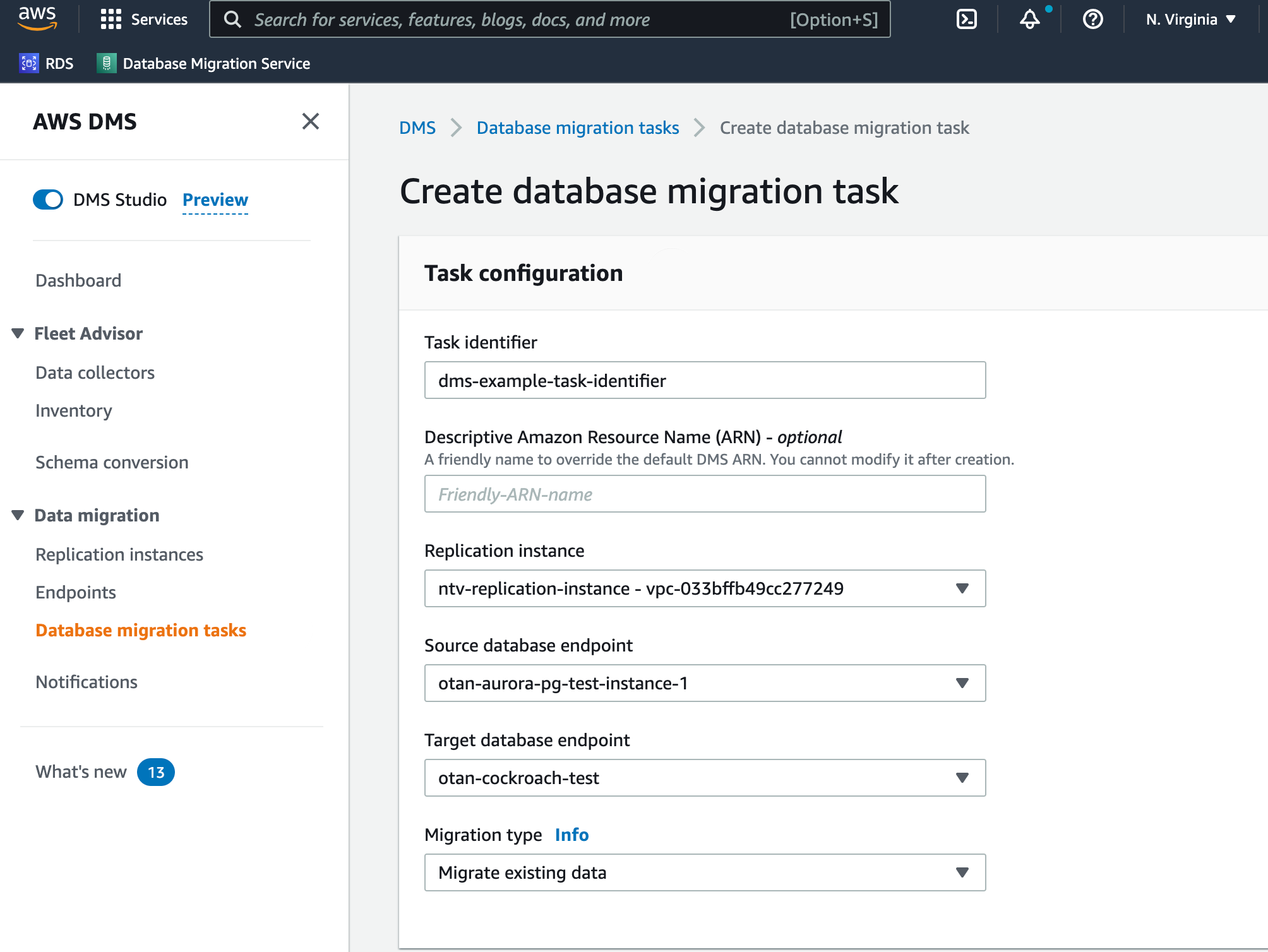

Step 2.1. Task configuration

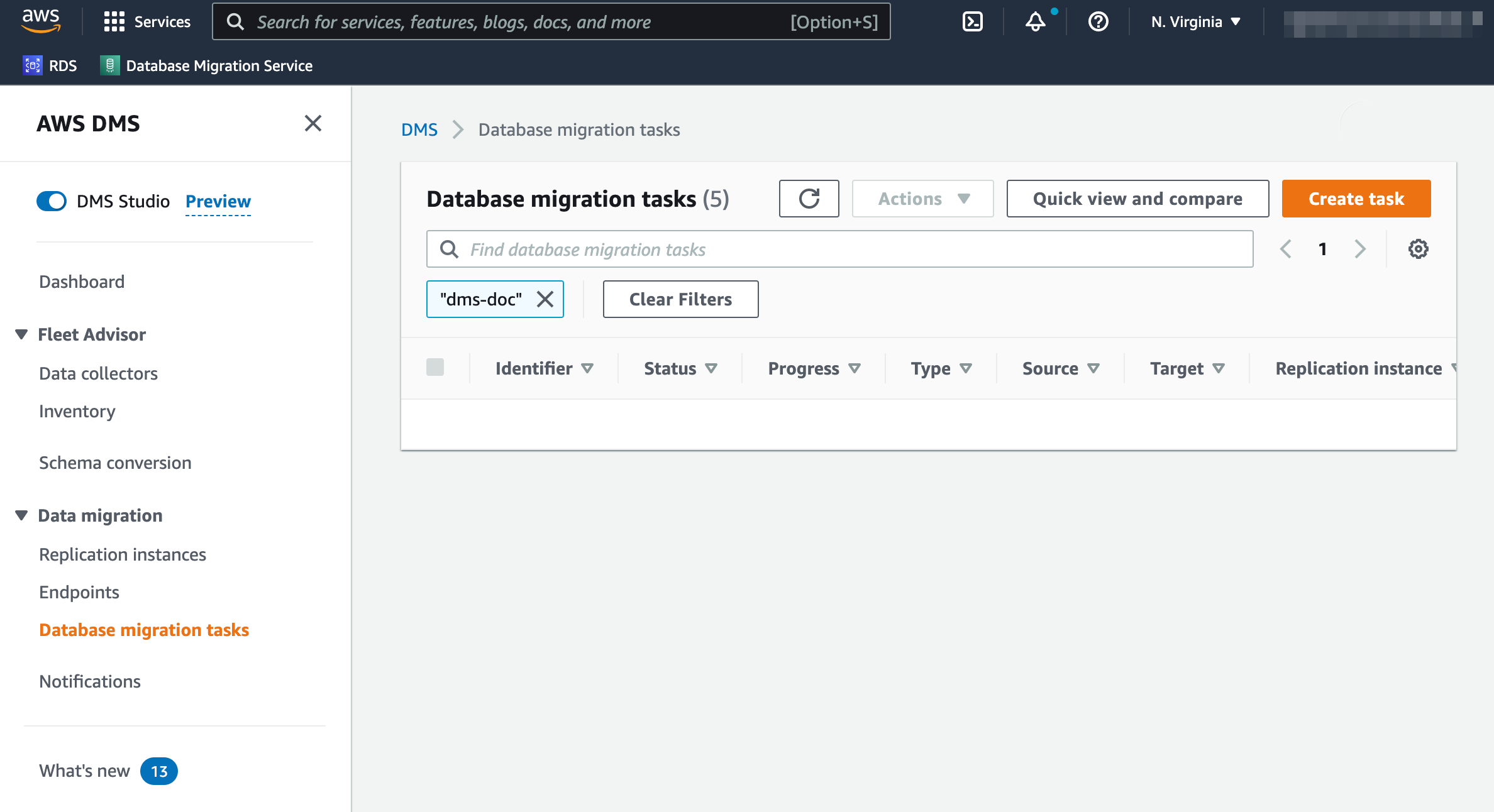

- While in AWS DMS, select Database migration tasks in the sidebar. A list of any existing database migration tasks is displayed.

- In the top-right portion of the window, select Create task.

  A configuration page opens.

- Supply a Task identifier to identify the replication task.

- Select the Replication instance and Source database endpoint you created before starting this tutorial.

- In the Target database endpoint dropdown, select the CockroachDB endpoint you created in Step 1.

- Select the appropriate Migration type based on your needs.

Warning: If you choose Migrate existing data and replicate ongoing changes or Replicate data changes only, you must first disable revision history for cluster backups, as described in Before you begin.
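The migration types in the console correspond to the MigrationType values of the DMS API. Only the types that replicate ongoing changes need revision history disabled. The following Python sketch encodes that rule and generates the ALTER BACKUP SCHEDULE statement from Before you begin for each backup schedule. The schedule IDs are placeholders for the IDs you read from SHOW SCHEDULES. If SHOW SCHEDULES lists no backup schedules, contact Support instead.

```python
from typing import Dict, List

# Console labels mapped to the MigrationType values used by the DMS API.
MIGRATION_TYPES: Dict[str, str] = {
    "Migrate existing data": "full-load",
    "Migrate existing data and replicate ongoing changes": "full-load-and-cdc",
    "Replicate data changes only": "cdc",
}


def requires_revision_history_off(migration_type: str) -> bool:
    """Types that replicate ongoing changes (CDC) need revision history disabled."""
    return migration_type in ("full-load-and-cdc", "cdc")


def disable_revision_history_statements(schedule_ids: List[int]) -> List[str]:
    """One statement per backup schedule ID taken from SHOW SCHEDULES output."""
    return [
        f"ALTER BACKUP SCHEDULE {schedule_id} SET WITH revision_history = 'false';"
        for schedule_id in schedule_ids
    ]
```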

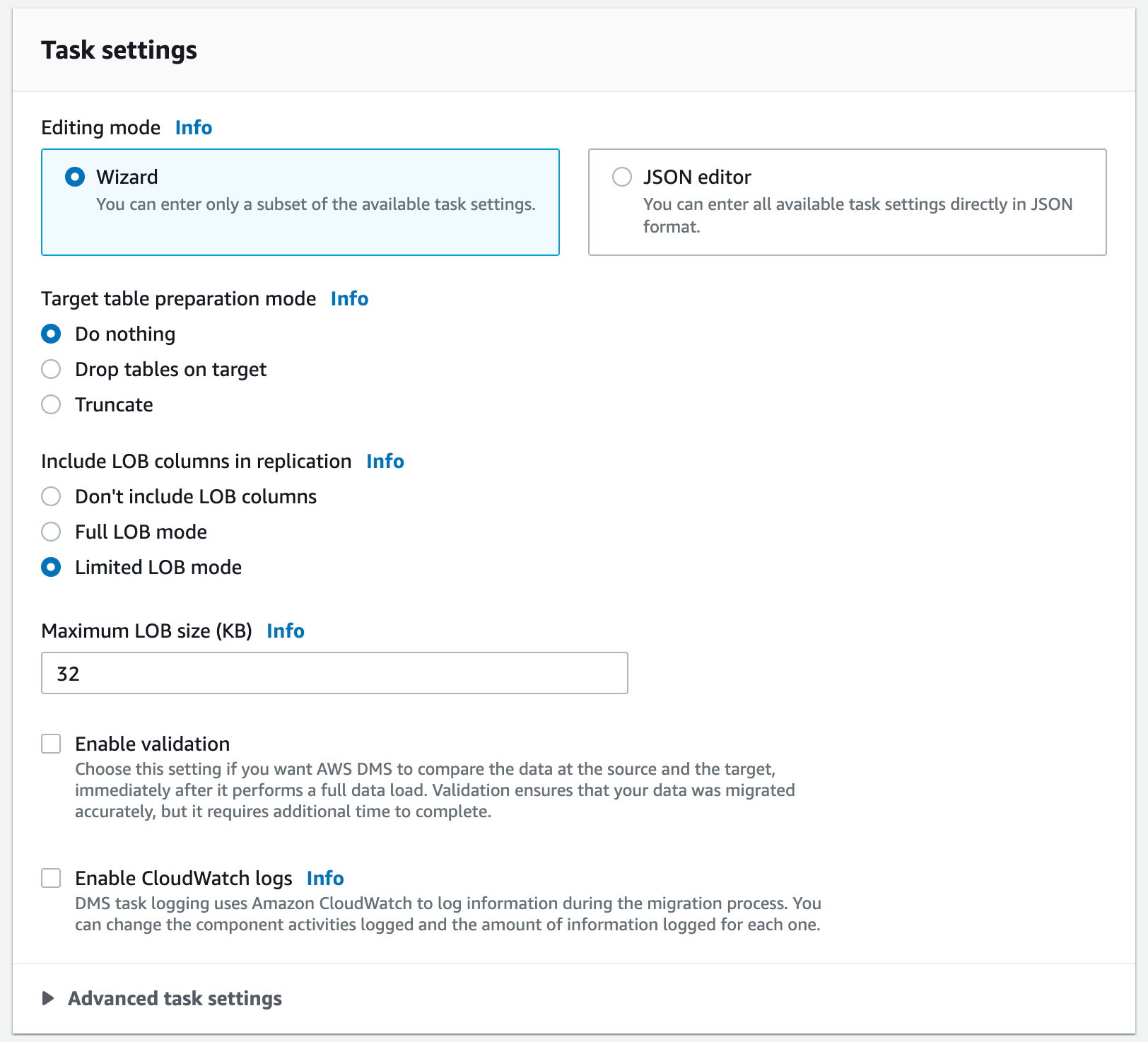

Step 2.2. Task settings

- For the Editing mode radio button, keep Wizard selected.

- To preserve the schema you manually created, select Truncate or Do nothing for the Target table preparation mode.

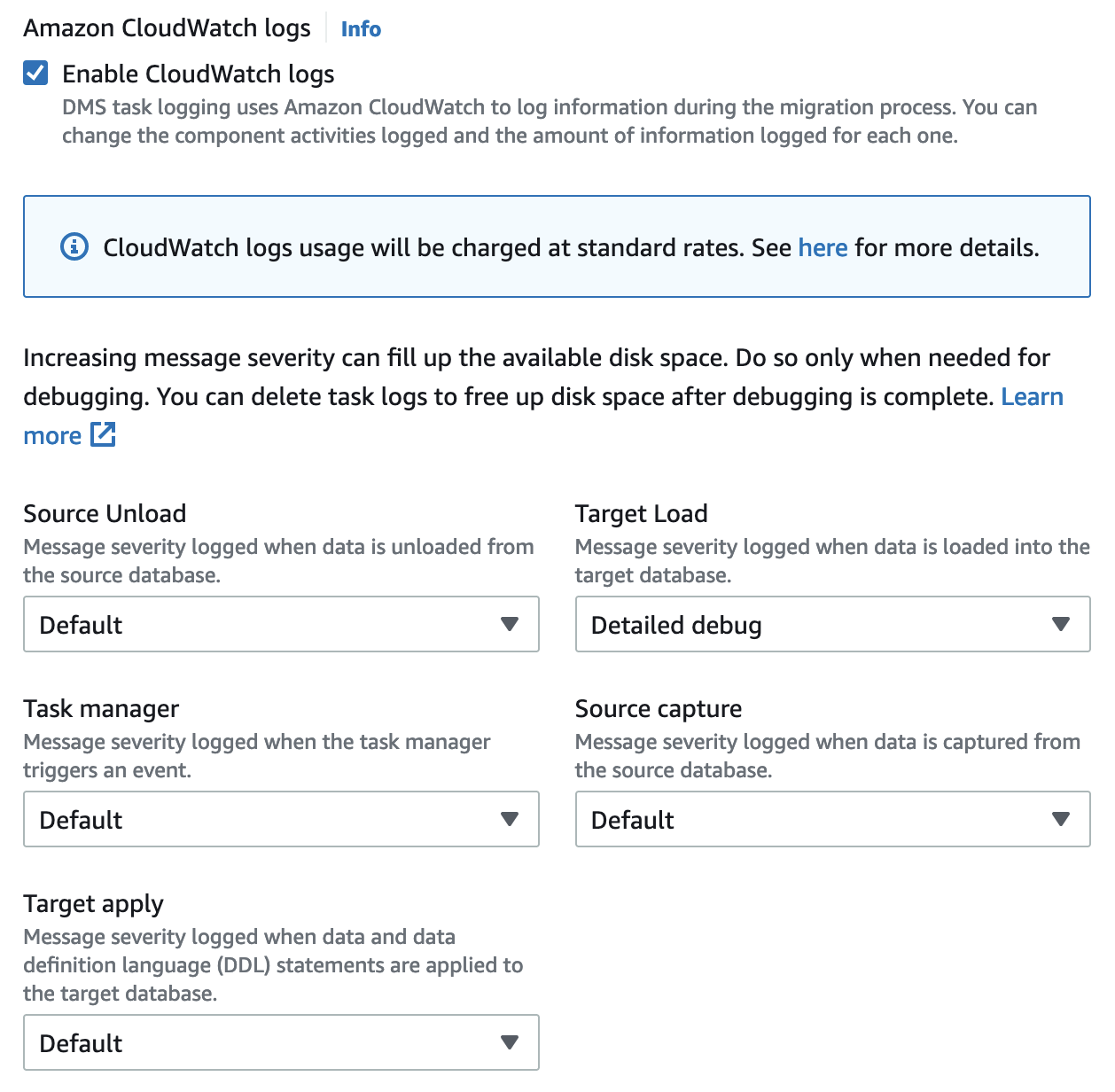

- Check the Enable CloudWatch logs option. We highly recommend this for troubleshooting potential migration issues.

- For the Target Load component, select Detailed debug.
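In the JSON editor, the wizard choices in this step appear as task settings. The following Python sketch builds a partial task-settings fragment containing the target table preparation mode and the CloudWatch logging component. The key names follow the AWS DMS task settings format. The sketch assumes you merge the fragment into the full JSON that the editor shows, rather than submitting it alone.

```python
import json
from typing import Any, Dict

# Console labels for "Target table preparation mode" and their JSON values.
PREP_MODES: Dict[str, str] = {
    "Do nothing": "DO_NOTHING",
    "Truncate": "TRUNCATE_BEFORE_LOAD",
    "Drop tables on target": "DROP_AND_CREATE",
}


def task_settings_json(prep_mode_label: str = "Truncate") -> str:
    """Return a partial task-settings document matching the wizard choices above."""
    prep_mode = PREP_MODES[prep_mode_label]
    if prep_mode == "DROP_AND_CREATE":
        # Dropping target tables would discard the manually created schema.
        raise ValueError("use Truncate or Do nothing to preserve the schema")
    settings: Dict[str, Any] = {
        "FullLoadSettings": {"TargetTablePrepMode": prep_mode},
        "Logging": {
            "EnableLogging": True,  # "Enable CloudWatch logs"
            "LogComponents": [
                # "Target Load" component at "Detailed debug" severity.
                {"Id": "TARGET_LOAD", "Severity": "LOGGER_SEVERITY_DETAILED_DEBUG"},
            ],
        },
    }
    return json.dumps(settings, indent=2)
```

The helper rejects Drop tables on target to mirror the advice above: that mode would replace the schema you created manually.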

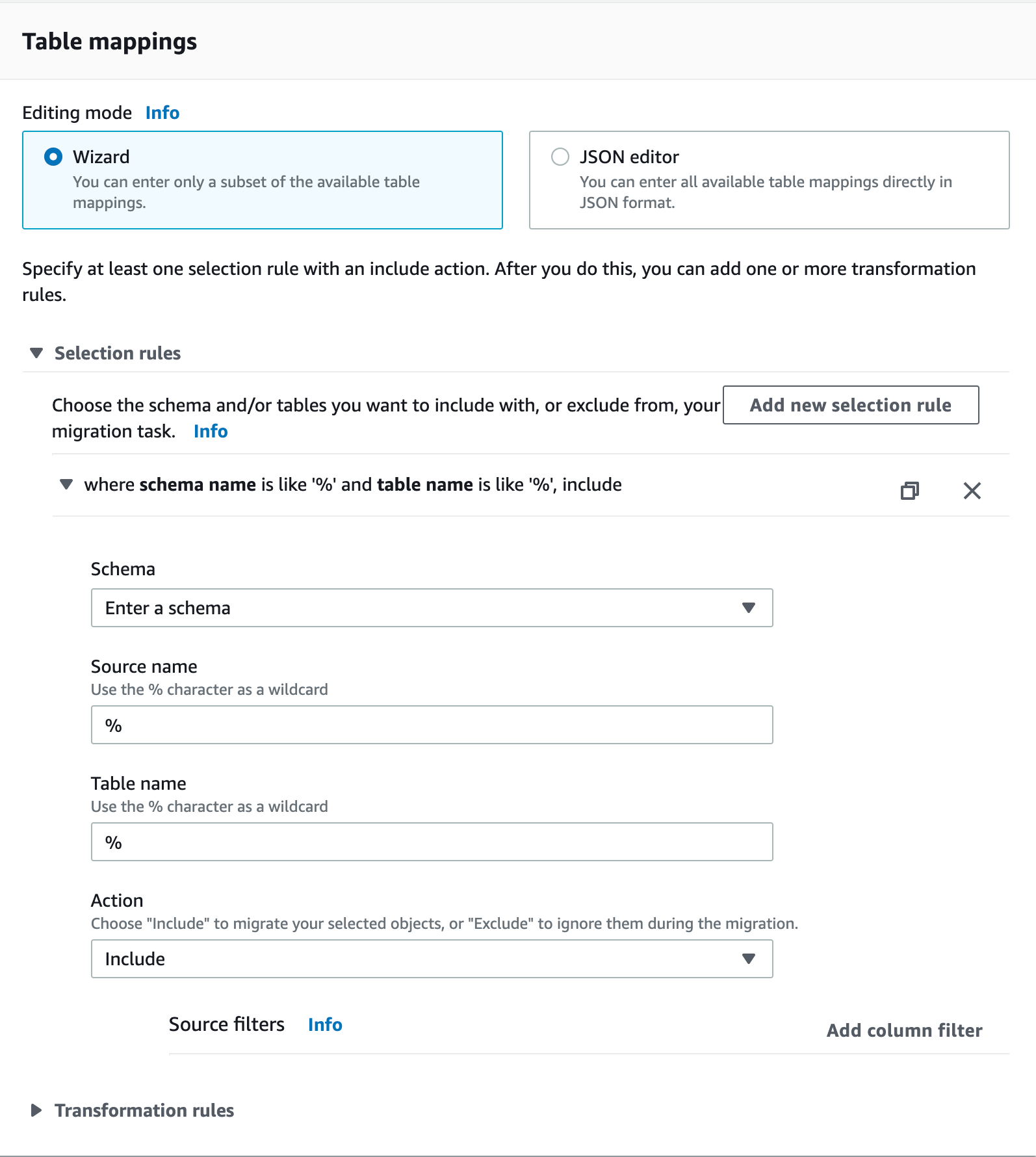

Step 2.3. Table mappings

When specifying a range of tables to migrate, the following aspects of the source and target database schema must match unless you use transformation rules:

- Column names must be identical.

- Column types must be compatible.

- Column nullability must be identical.
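Before adding a selection rule, you can compare the source and target columns against these three rules. The following Python sketch assumes you have already read each table's columns into a dictionary that maps a column name to a (type, nullable) pair. The compatible map is a hypothetical, simplified stand-in for real type compatibility, which depends on your source database.

```python
from typing import Dict, List, Tuple

# Hypothetical schema description: column name -> (type name, is_nullable).
Columns = Dict[str, Tuple[str, bool]]


def mapping_mismatches(
    source: Columns, target: Columns, compatible: Dict[str, str]
) -> List[str]:
    """List columns that break the name, type, or nullability rules.

    `compatible` maps a source type to the CockroachDB type it should land as;
    types not in the map must match by name (case-insensitively).
    """
    problems: List[str] = []
    for name in sorted(source.keys() | target.keys()):
        if name not in target:
            problems.append(f"column {name}: missing on target")
            continue
        if name not in source:
            problems.append(f"column {name}: missing on source")
            continue
        src_type, src_nullable = source[name]
        dst_type, dst_nullable = target[name]
        expected = compatible.get(src_type.upper(), src_type.upper())
        if expected.upper() != dst_type.upper():
            problems.append(f"column {name}: type {src_type} does not map to {dst_type}")
        if src_nullable != dst_nullable:
            problems.append(
                f"column {name}: nullability differs "
                f"(source nullable={src_nullable}, target nullable={dst_nullable})"
            )
    return problems
```

For example, with compatible = {"INTEGER": "INT8", "VARCHAR": "STRING"}, a source VARCHAR column maps cleanly to a CockroachDB STRING column. The helper reports that column only if its nullability differs.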

- For the Editing mode radio button, keep Wizard selected.

- Select Add new selection rule.

- In the Schema dropdown, select Enter a schema.

- Supply the appropriate Source name (schema name), Table name, and Action.

For example, use % as the schema name to match all schemas in a PostgreSQL database. In MySQL, however, using % as the schema name imports all databases, including the metadata and system databases, because MySQL treats schemas and databases as the same thing.
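The wizard stores selection rules as table-mapping JSON, which you can also write directly. The following Python sketch generates that JSON: one include rule for the schema and table pattern you enter, plus optional exclude rules. For MySQL, the list of system databases to exclude when using % is an assumption about a typical MySQL installation. Check it against your own server.

```python
import json
from typing import Any, Dict, List, Sequence

# Assumed typical MySQL system databases that "%" would otherwise select.
MYSQL_SYSTEM_SCHEMAS = ["information_schema", "mysql", "performance_schema", "sys"]


def table_mappings(
    schema: str, table: str = "%", exclude_schemas: Sequence[str] = ()
) -> str:
    """Build table-mapping JSON with one include rule and optional exclude rules."""
    rules: List[Dict[str, Any]] = [
        {
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-1",
            "object-locator": {"schema-name": schema, "table-name": table},
            "rule-action": "include",
        }
    ]
    for rule_id, excluded in enumerate(exclude_schemas, start=2):
        rules.append(
            {
                "rule-type": "selection",
                "rule-id": str(rule_id),
                "rule-name": f"exclude-{rule_id}",
                "object-locator": {"schema-name": excluded, "table-name": "%"},
                "rule-action": "exclude",
            }
        )
    return json.dumps({"rules": rules}, indent=2)
```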

Step 3. Verify the migration

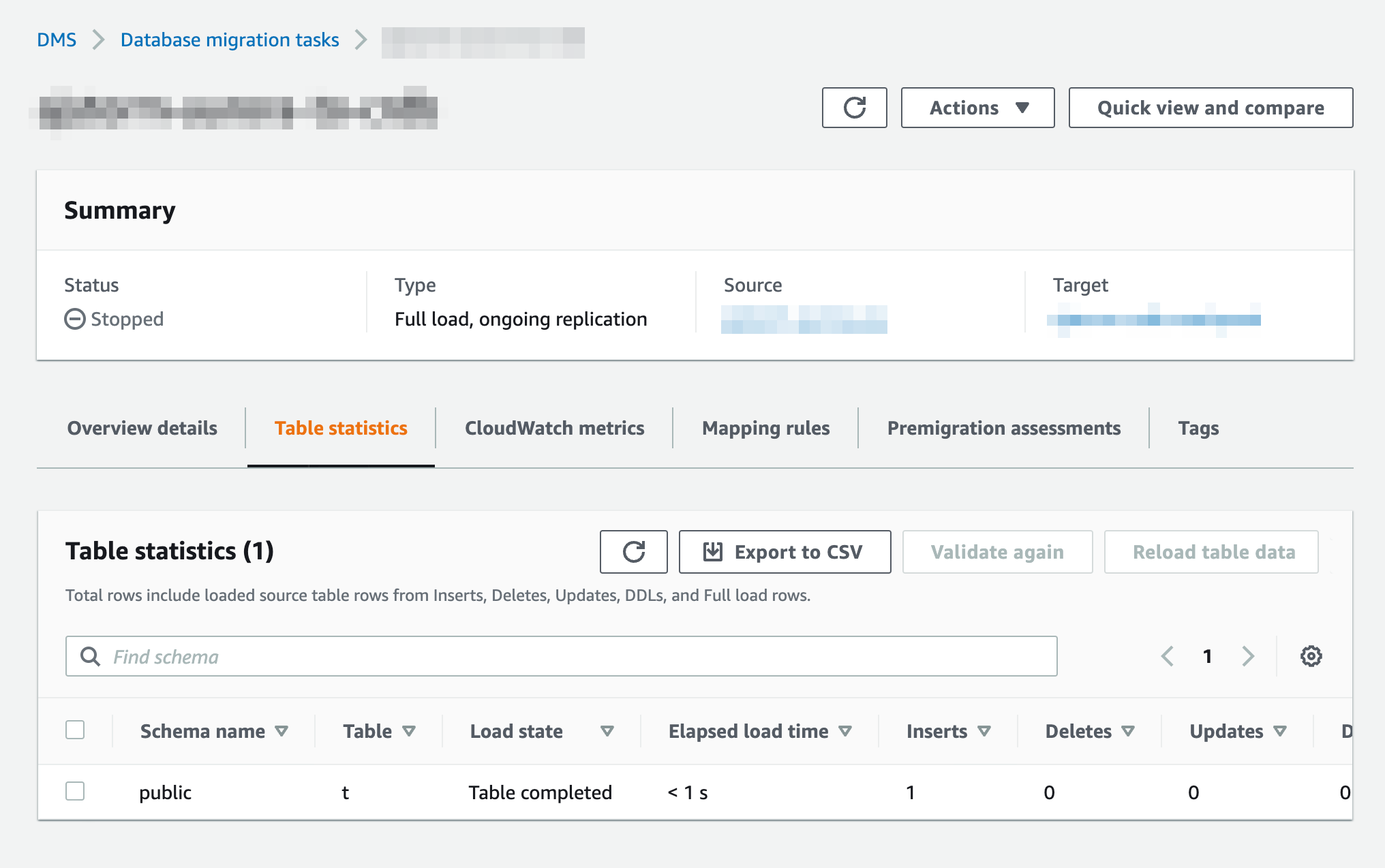

Data should now be moving from source to target. You can analyze the Table Statistics page for information about replication.

- In AWS DMS, open Database migration tasks in the sidebar.

- Select the task you created in Step 2.

- Select Table statistics below the Summary section.

If your migration succeeded, you should now re-enable revision history for cluster backups.

If your migration failed, select the checkbox next to each table you want to re-migrate, and then select Reload table data.
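You can also script this check with the AWS DMS API. Each entry returned by DescribeTableStatistics includes SchemaName, TableName, and TableState, and ReloadTables accepts the tables to reload. The following Python sketch assumes the statistics are already fetched into a list of dictionaries and treats a TableState of "Table error" as a failed table. The task ARN passed to reload_request is a placeholder.

```python
from typing import Any, Dict, List


def failed_tables(table_statistics: List[Dict[str, Any]]) -> List[Dict[str, str]]:
    """Select tables whose TableState is "Table error", in ReloadTables format."""
    return [
        {"SchemaName": stat["SchemaName"], "TableName": stat["TableName"]}
        for stat in table_statistics
        if stat.get("TableState") == "Table error"
    ]


def reload_request(task_arn: str, table_statistics: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Build ReloadTables parameters equivalent to "Reload table data"."""
    return {
        "ReplicationTaskArn": task_arn,
        "TablesToReload": failed_tables(table_statistics),
        "ReloadOption": "data-reload",
    }
```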

Optional configurations

AWS PrivateLink

If using CockroachDB Dedicated, you can enable AWS PrivateLink to securely connect your AWS application with your CockroachDB Dedicated cluster using a private endpoint. To configure AWS PrivateLink with CockroachDB Dedicated, see Network Authorization.

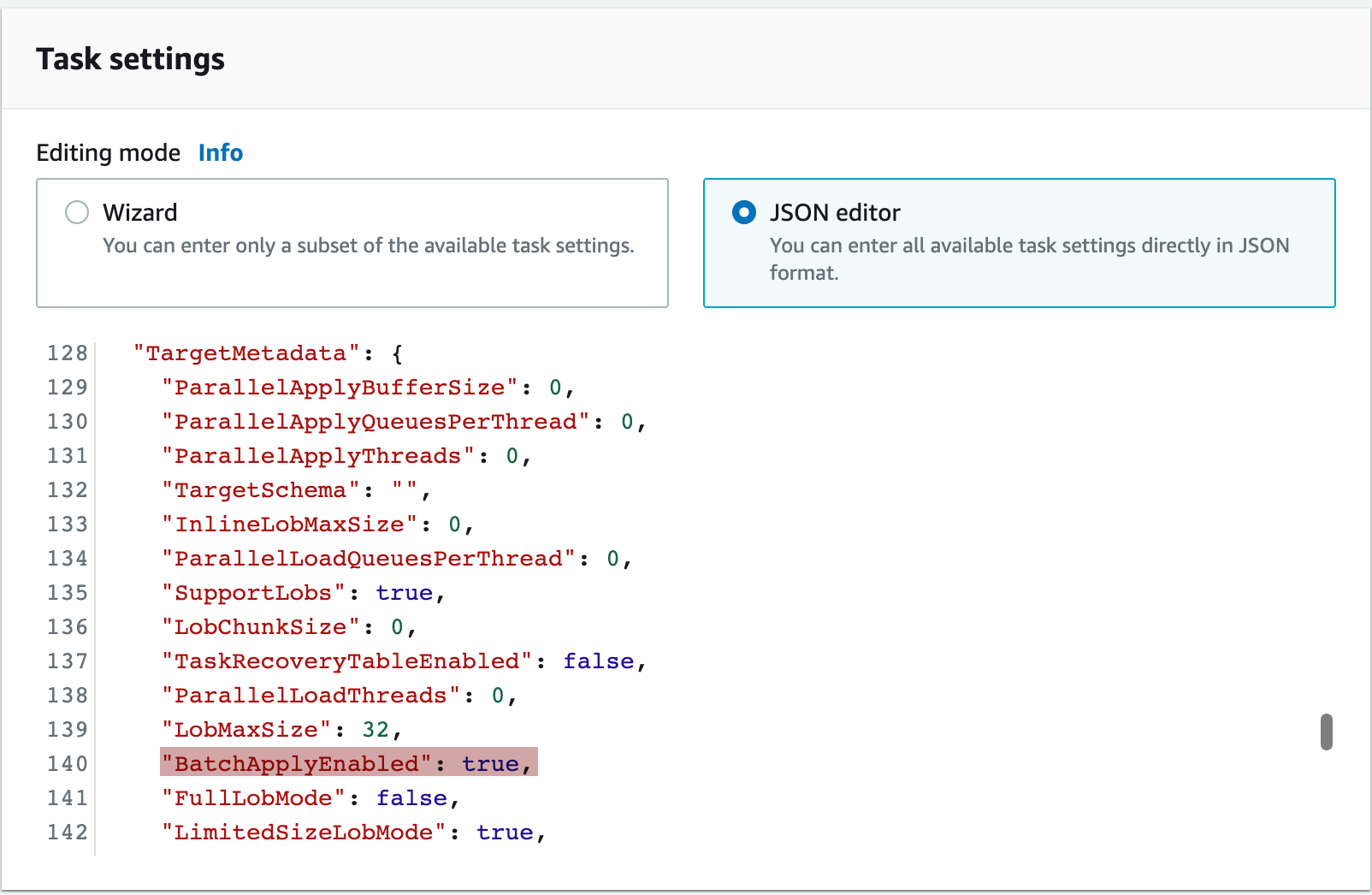

BatchApplyEnabled

The BatchApplyEnabled setting can improve replication performance and is recommended for larger workloads.

- In AWS DMS, open Database migration tasks in the sidebar.

- Choose your task, and then choose Modify.

- In the Task settings section, switch the Editing mode from Wizard to JSON editor. Locate the BatchApplyEnabled setting and change its value to true. For more information about the BatchApplyEnabled setting, see the AWS DMS documentation.

BatchApplyEnabled does not work when Drop tables on target is the target table preparation mode. Therefore, if you use BatchApplyEnabled, you must manually copy all schema changes to the target.
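The BatchApplyEnabled flag lives under TargetMetadata in the task settings JSON. The following Python sketch applies the edit to a settings string copied from the JSON editor. It refuses to proceed when the target table preparation mode is DROP_AND_CREATE (Drop tables on target), reflecting the restriction above. The input is assumed to be the editor's JSON, and nothing is sent to AWS.

```python
import json


def enable_batch_apply(settings_json: str) -> str:
    """Return the task settings with TargetMetadata.BatchApplyEnabled set to true."""
    settings = json.loads(settings_json)
    prep_mode = settings.get("FullLoadSettings", {}).get("TargetTablePrepMode")
    if prep_mode == "DROP_AND_CREATE":
        # BatchApplyEnabled does not work with "Drop tables on target".
        raise ValueError("switch to Truncate or Do nothing before enabling batch apply")
    settings.setdefault("TargetMetadata", {})["BatchApplyEnabled"] = True
    return json.dumps(settings, indent=2)
```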

Known limitations

- When using Truncate or Do nothing as a target table preparation mode, you cannot include tables with any hidden columns. You can verify which tables contain hidden columns by executing the following SQL query:

      SELECT table_catalog, table_schema, table_name, column_name
      FROM information_schema.columns
      WHERE is_hidden = 'YES';

- If you select Enable validation in your task settings and have a TIMESTAMP/TIMESTAMPTZ column in your database, the migration will fail with the following error:

      Suspending the table : 1 from validation since we received an error message : ERROR: unknown signature: to_char(timestamp, string); No query has been executed with that handle with type : non-retryable(0) (partition_validator.c:514)

  This is resolved in v22.2.1. On earlier versions, do not select the Enable validation option if your database has a TIMESTAMP/TIMESTAMPTZ column.

- If you are migrating from PostgreSQL, are using a STRING as a PRIMARY KEY, and have selected Enable validation in your task settings, validation can fail due to a difference in how CockroachDB handles case sensitivity in strings.

  To prevent this error, use COLLATE "C" on the relevant columns in PostgreSQL or a collation such as COLLATE "en_US" in CockroachDB.

- A migration to a multi-region cluster using AWS DMS will fail if the target database has regional by row tables. This is because the COPY statement used by DMS is unable to process the crdb_region column in regional by row tables.

  To prevent this error, set the regional by row table localities to REGIONAL BY TABLE and perform the migration. After the DMS operation is complete, set the table localities to REGIONAL BY ROW.
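The workaround for regional by row tables amounts to two sets of locality changes around the DMS run. The following Python sketch only generates those ALTER TABLE ... SET LOCALITY statements. You supply the list of regional by row tables. The table names are interpolated as-is, so they must already be valid (and, if needed, quoted) identifiers.

```python
from typing import List, Tuple


def locality_statements(tables: List[str]) -> Tuple[List[str], List[str]]:
    """Return (before_migration, after_migration) statements for the given tables."""
    # Before the DMS run: avoid the crdb_region column that COPY cannot process.
    before = [f"ALTER TABLE {table} SET LOCALITY REGIONAL BY TABLE;" for table in tables]
    # After the DMS run: restore the original locality.
    after = [f"ALTER TABLE {table} SET LOCALITY REGIONAL BY ROW;" for table in tables]
    return before, after
```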

Troubleshooting common issues

For visibility into migration problems:

- Check the SQL_EXEC logging channel for log messages related to COPY statements and the tables you are migrating.

- Check the Amazon CloudWatch logs that you configured for messages containing SQL_ERROR.

If you encounter errors like the following:

    2022-10-21T13:24:07 [SOURCE_UNLOAD ]W: Value of column 'metadata' in table 'integrations.integration' was truncated to 32768 bytes, actual length: 116664 bytes (postgres_endpoint_unload.c:1072)

Try selecting Full LOB mode in your task settings. If this does not resolve the error, select Limited LOB mode and gradually increase the Maximum LOB size until the error goes away. For more information about LOB (large binary object) modes, see the AWS documentation.
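Instead of raising Maximum LOB size by trial and error, you can read the required size from the warning itself. The following Python sketch parses the warning format shown above, assuming the wording stays the same in your DMS version. It rounds the actual length up to whole kilobytes, the unit of the Maximum LOB size (KB) field and of the LobMaxSize task setting.

```python
import math
import re
from typing import Optional, Tuple

# Matches the SOURCE_UNLOAD truncation warning shown above.
_TRUNCATION_RE = re.compile(
    r"Value of column '(?P<column>[^']+)' in table '(?P<table>[^']+)' "
    r"was truncated to (?P<limit>\d+) bytes, actual length: (?P<actual>\d+) bytes"
)


def parse_lob_truncation(line: str) -> Optional[Tuple[str, str, int]]:
    """Return (table, column, actual_bytes) for a truncation warning, else None."""
    match = _TRUNCATION_RE.search(line)
    if match is None:
        return None
    return match.group("table"), match.group("column"), int(match.group("actual"))


def min_lob_max_size_kb(actual_bytes: int) -> int:
    """Smallest whole-KB Maximum LOB size that fits the value without truncation."""
    return math.ceil(actual_bytes / 1024)
```

For the sample warning, the 116664-byte value needs a Maximum LOB size of at least 114 KB. The 32768 bytes it was truncated to correspond to 32 KB.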

If you encounter a TransactionRetryWithProtoRefreshError error in the Amazon CloudWatch logs or CockroachDB logs when migrating an especially large table with millions of rows, and are running v22.1.14 or later, set the following session variable using ALTER ROLE ... SET {session variable}:

    ALTER ROLE {username} SET copy_from_retries_enabled = true;

Then retry the migration.

Run the following query from within the target CockroachDB cluster to identify common problems with any tables that were migrated. If problems are found, explanatory messages will be returned in the cockroach sql shell.

    WITH
      invalid_columns AS (
        SELECT 'Table ' || table_schema || '.' || table_name || ' has column ' || column_name || ' which is hidden. Either drop the column or mark it as not hidden for DMS to work.' AS fix_me
        FROM information_schema.columns
        WHERE is_hidden = 'YES'
          AND table_name NOT LIKE 'awsdms_%'
      ),
      invalid_version AS (
        SELECT 'This cluster is on a version of CockroachDB which does not support AWS DMS. CockroachDB v21.2.13+ or v22.1+ is required.' AS fix_me
        WHERE split_part(substr(substring(version(), e'v\\d+\\.\\d+.\\d+'), 2), '.', 1)::INT8 < 22
          AND NOT (
            split_part(substr(substring(version(), e'v\\d+\\.\\d+.\\d+'), 2), '.', 1)::INT8 = 21
            AND split_part(substr(substring(version(), e'v\\d+\\.\\d+.\\d+'), 2), '.', 2)::INT8 = 2
            AND split_part(substr(substring(version(), e'v\\d+\\.\\d+.\\d+'), 2), '.', 3)::INT8 >= 13
          )
      ),
      has_no_pk AS (
        SELECT 'Table ' || a.table_schema || '.' || a.table_name || ' has column ' || a.column_name || ' has no explicit PRIMARY KEY. Ensure you are not using target mode "Drop tables on target" and that this table has a PRIMARY KEY.' AS fix_me
        FROM information_schema.key_column_usage AS a
        JOIN information_schema.columns AS b
          ON a.table_schema = b.table_schema
          AND a.table_name = b.table_name
          AND a.column_name = b.column_name
        WHERE b.is_hidden = 'YES'
          AND a.column_name = 'rowid'
          AND a.table_name NOT LIKE 'awsdms_%'
      )
    SELECT fix_me FROM has_no_pk
    UNION ALL
    SELECT fix_me FROM invalid_columns
    UNION ALL
    SELECT fix_me FROM invalid_version;

Refer to Debugging Your AWS DMS Migrations (Part 1, Part 2, and Part 3) on the AWS Database Blog.

If the migration is still failing, contact Support and include the following information when filing an issue:

- Source database name.

- CockroachDB version.

- Source database schema.

- CockroachDB database schema.

- Any relevant logs (e.g., the last 100 lines preceding the AWS DMS failure).

- Ideally, a sample dataset formatted as a database dump file or CSV.
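To collect the log excerpt Support asks for, you can cut the lines leading up to the first failure out of an exported log. The following Python sketch assumes the log is already a list of lines and that the failure is marked by SQL_ERROR, as in the CloudWatch messages mentioned under Troubleshooting common issues. Pass a different marker if your failure looks different.

```python
from typing import List, Sequence


def lines_before_failure(
    lines: Sequence[str], marker: str = "SQL_ERROR", count: int = 100
) -> List[str]:
    """Return up to `count` lines ending with the first line containing `marker`.

    If no line contains the marker, the last `count` lines are returned instead.
    """
    if count <= 0:
        return []
    for index, line in enumerate(lines):
        if marker in line:
            # Include the failing line itself as the last line of the excerpt.
            return list(lines[max(0, index + 1 - count):index + 1])
    return list(lines[-count:])
```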